Testing our code is very important. Obviously we want to make sure that our code works as advertised.

Automated testing is useful because it can remove many hours of manual testing from our development process. When we are evolving code, frequently changing it to add or update features, we need to test our code. To be thorough we should retest everything after each change, but the only way to be efficient at this is to have automated tests that can retest literally everything in just moments.

Testing is even more crucial when our code has to be very secure or very reliable, as is the case with smart contracts. Code deployed to the blockchain is immutable and publicly executable. We only get one chance to deploy safe, reliable and secure code to the blockchain, so we had better make sure it works: bugs and unexpected loopholes in our code can result in exploits and potentially the loss of millions.

In this series of three blog posts I will demonstrate effective automated testing techniques to test your TEAL smart contracts before deploying them to the Algorand blockchain.

Here’s what’s coming up:

- Part 1:

  - Discussion of the aims and considerations for automated testing.

  - Presents the example TEAL smart contract we’ll be testing in this series.

  - Setting up your Algorand Sandbox.

  - Manually deploying and testing the contract.

  - Discussion of the setup for testing, the Algorand Sandbox and why having a custom Sandbox can be useful.

- Part 2:

  - Presents a method of automated testing for TEAL smart contracts against an actual Algorand Sandbox, including how to verify that a transaction was recorded and that required state changes have been made.

  - Discussion of the language of testing and how declarative and expressive tests can really help to write robust and conclusive tests.

- Part 3:

  - Presents a method of automated testing for TEAL smart contracts against a simulated Algorand virtual machine (AVM). This executes much faster than testing against an actual Algorand Sandbox.

  - Discussion of defensive testing and how it can help bullet-proof your code.

Automated testing for smart contracts is tricky, but the recipes that I present in this series help make it a bit easier.

Getting the code

The code that accompanies this series of blog posts is available on GitHub:

https://github.com/hone-labs/teal-testing-example

The code repository contains a simple TEAL smart contract that increments a counter, which I’ll refer to now as the teal-counter. Once you deploy the smart contract to the blockchain you can invoke “methods” on it to increment or decrement the counter. We’ll do this at the end of this part of the blog post series. The teal-counter is a trivial and somewhat meaningless example, but it’s enough to demonstrate important automated testing techniques.

You can download a zip file of the code through this link:

https://github.com/hone-labs/teal-testing-example/archive/refs/heads/main.zip

Or you can use Git to clone the code repository:

    git clone https://github.com/hone-labs/teal-testing-example

To run the tests and deployment process, you must have Node.js installed, which you can download from the official Node.js website.

Why test?

Did I make the case for testing yet? I hope my brief words at the start did, but just in case let me make it clearer.

Testing is not optional. As a developer, testing your code and being confident that it works is part of your job.

You have to understand that the default state of code is to be broken. There are many more ways for code to be broken than for it to accidentally (i.e. luckily) turn out to be working. It’s almost impossible for anyone to write working code without having tested it and probably also having first had to fix a few issues.

Testing is even more essential when it comes to smart contracts. This code is immutable and we only have one real shot at deployment to Mainnet. Sure, with Algorand we could delete the contract and redeploy or we could update the contract, but allowing those operations on our smart contracts doesn’t exactly instill trust in us, so often these operations are not allowed.

Once our code is released to the blockchain anyone can invoke it and we must assume that we will immediately be attacked on every possible front. If there are bugs or loopholes in our code they will be found. Testing (even really obscure edge cases) is the only chance we have of finding problems before the attackers do.

Why automated testing?

Automated testing, of course, is optional, but it can be very effective. Most code evolves significantly during development. Each time you change your code you risk adding new bugs and new loopholes that you might not notice. Any feature you add, any feature you update, any code that you refactor - these are all opportunities to break your code.

The only way to know for sure is to thoroughly retest your code after each and every change.

Of course, the problem with manual testing is that we often forget to do it thoroughly, or even worse we are just lazy and bank the testing so that we can forget to do it later.

Automated testing solves this problem. It can be expensive and difficult to create automated tests, but we can then reuse them time after time to cheaply and thoroughly test our code. That means automated tests quickly pay for themselves when code is evolving frequently, or even just when you must prove that code is secure and reliable.

Automated testing gives us confidence in our code. This is a huge and probably understated benefit that can help free up our minds to solve bigger problems. We don’t have to struggle to remember the last time that we tested our code manually, it’s just not something that’s weighing on our minds, because we can simply run our automated tests at any moment to instantly tell us if our code is working or not.

Automated testing gives us freedom to move quickly and break things. We can move quickly and when we inevitably cause problems our early warning system, automated testing, makes it obvious. I hope through this series of blog posts you’ll see that automated testing is not as difficult as you might have thought.

Automated testing aims

Let’s consider our aims with automated testing. What are we trying to achieve with it?

Well, the ability to run our tests automatically is one thing. For example, each time we push our code to GitHub we can have GitHub Actions automatically run our test pipeline and email us if any developer has pushed a breaking change.

Code that is deterministic or functional is highly prized. That is to say, code that behaves the same way and produces the same output each and every time that we run it. Each automated test exercises a feature or an aspect of our code automatically and verifies that it behaves deterministically.

Automated testing is also a form of communication or documentation. The tests that you write show how our code is supposed to work and, possibly more importantly, how it’s not supposed to work. We can be very successful with testing by creating a language in which we can write expressive tests - I’ll explain that in part two of this series.

We should use automated testing not just to exercise the happy path of our code (the path we hope our users will take). We should also test that our code can fail gracefully in the face of erroneous or even malicious use. I call this defensive testing and we’ll talk about it in part three of this series.

Testing considerations

When thinking of how to test a given project we must first ask and answer some questions.

Where will we deploy our code? We’ll deploy our code to a sandbox Algorand blockchain. That’s our own personal blockchain node that we can use any way we like. More about that in a moment.

How will we deploy our code? We’ll use the Algorand JavaScript SDK, algosdk, that is available on npm.

How will we invoke “methods” on our smart contract? Again we’ll use algosdk.
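To give a rough idea of what invoking a “method” through algosdk can look like, here is a hedged sketch. It assumes the algosdk 1.x/2.x API (makeApplicationNoOpTxn and a txId field in the send response), and it assumes the contract picks its method from the first application argument, which is a common TEAL pattern but something you should confirm against the contract source. The function names encodeMethodName and invokeMethod are my own for this example. The SDK and client are passed in as parameters so the function is easy to stub in tests.

```javascript
// Sketch only: assumes the algosdk 1.x/2.x API. `algosdk` and `algodClient`
// are passed in; in a real script you would require("algosdk") and create
// the client yourself.

// Application arguments are byte arrays, so the method name is encoded first.
function encodeMethodName(method) {
    return new Uint8Array(Buffer.from(method, "utf8"));
}

async function invokeMethod(algosdk, algodClient, account, appId, method) {
    // Fetch the suggested fee and validity window from the node.
    const params = await algodClient.getTransactionParams().do();

    // Build a NoOp application call whose first argument names the method
    // (assumption: this is how the contract selects which code to run).
    const txn = algosdk.makeApplicationNoOpTxn(
        account.addr, params, appId, [encodeMethodName(method)]
    );

    // Sign with the caller's secret key and submit to the node.
    const signedTxn = txn.signTxn(account.sk);
    const { txId } = await algodClient.sendRawTransaction(signedTxn).do();

    // Wait up to 4 rounds for the transaction to be confirmed.
    return algosdk.waitForConfirmation(algodClient, txId, 4);
}
```

Passing the SDK and client in as arguments is just a convenience for this sketch; the helper functions in the repository may be organised differently.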

Before we set up our sandbox blockchain and deploy our smart contract, let’s understand the structure of the teal-counter example project.

The structure of the project

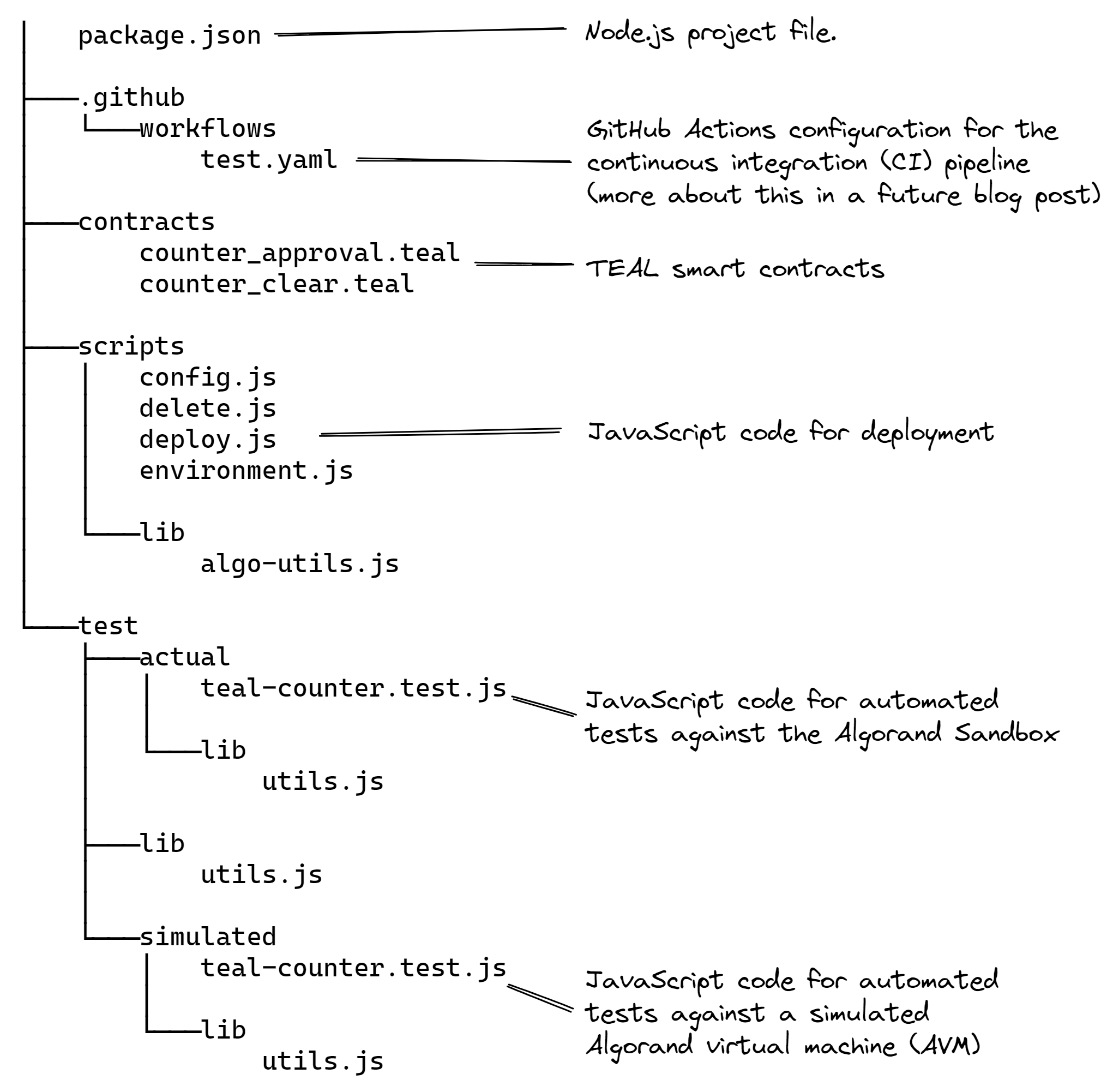

The example project is a Node.js project with subdirectories for smart contracts, deployment and testing code.

The smart contracts are written in the TEAL language. The deployment and testing code are written in JavaScript.

The following diagram shows the layout of the project:

The smart contract

Presented here is a small extract of the TEAL smart contract code for the teal-counter. This is the code that implements one of the “methods”, the increment “method”, of the contract:

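    call_increment:
    byte "counterValue"   // Key for app_global_put (consumed last).
    byte "counterValue"   // Key for app_global_get.
    app_global_get        // Replace the key with the current counter value.
    int 1
    +                     // Add one to the counter value.
    app_global_put        // Store the new value under "counterValue".
    b success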

This code simply adds one to the variable counterValue in the contract’s global state. It isn’t very exciting, but it gives us something we can test.
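If the stack-based style of TEAL is new to you, it can help to trace the increment code by hand. The following is a toy JavaScript model of the stack, just enough to follow the opcodes shown above. It is my own illustration and not the real AVM: runIncrement is a made-up name, only these few opcodes are modelled and the final branch to success is left out.

```javascript
// A toy model of the AVM stack, just enough to trace the increment code.
// NOT the real AVM: only the opcodes used in the extract are modelled.
function runIncrement(globalState) {
    const stack = [];
    const program = [
        ["byte", "counterValue"], // key for app_global_put (used last)
        ["byte", "counterValue"], // key for app_global_get
        ["app_global_get"],
        ["int", 1],
        ["+"],
        ["app_global_put"],
    ];

    for (const [op, arg] of program) {
        switch (op) {
            case "byte":
            case "int":
                stack.push(arg);
                break;
            case "app_global_get": {
                // Pops a key, pushes its value (0 if the key is missing).
                const key = stack.pop();
                stack.push(key in globalState ? globalState[key] : 0);
                break;
            }
            case "+": {
                const b = stack.pop();
                const a = stack.pop();
                stack.push(a + b);
                break;
            }
            case "app_global_put": {
                // Pops the value, then the key, and writes to global state.
                const value = stack.pop();
                const key = stack.pop();
                globalState[key] = value;
                break;
            }
        }
    }
    return globalState;
}

const state = { counterValue: 1 };
runIncrement(state);
console.log(state.counterValue); // 2
```

This also shows why the key is pushed twice: the first copy waits at the bottom of the stack until app_global_put needs it.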

Please see GitHub to look at the complete smart contract:

https://github.com/hone-labs/teal-testing-example/blob/main/contracts/counter_approval.teal

Why JavaScript?

At this point you might be wondering why the deployment code and testing pipeline are implemented in JavaScript. The language we use in production at Hone is actually TypeScript, but to keep things simple for this example and for a larger audience, here we are using JavaScript.

To put it simply, we use JavaScript for our automated testing because JavaScript is what we use for everything else. We use it for our frontend coding and we also use it for our backend coding (on Node.js).

JavaScript is the only language that is truly fullstack, so we prefer to use it across our stack and avoid the cognitive penalty of switching languages when moving between frontend and backend.

Using JavaScript for automated testing feels natural to us, and we feel privileged that the JavaScript ecosystem has such great testing tools. In this blog post we’ll be using Jest to run our automated tests.

Deployment and testing setup

To get set up to run the teal-counter project you’ll need Node.js installed. Download or clone the code repository as shown earlier.

Now, in your terminal, change directory into the project:

    cd teal-testing-example

Then install dependencies for the project:

    npm install

Running npm install downloads the Jest testing framework, algosdk and a couple of other libraries we’ll use later.

Sandbox blockchain setup

Now for the tricky part.

To test our TEAL smart contract we need an actual Algorand blockchain node with an Algorand virtual machine (AVM) that is capable of running our smart contract. This is possibly the most difficult part of working through this series of blog posts. If you can get through this section, the rest of it should be easy (and, spoiler alert, in part 3 I’ll show you a way you can avoid doing this setup).

The simplest way to run an Algorand node is using the official Algorand Sandbox, available here:

https://github.com/algorand/sandbox

You start the Sandbox with a shell script that boots up a playground Algorand blockchain running under Docker and Docker Compose. To bring the sandbox up you’ll need Docker Desktop installed.

Unfortunately the official sandbox doesn’t work directly under Windows, because the Windows terminal doesn’t natively run the shell scripts it relies on. If you are on Windows and want an easier time (or if you just prefer to use docker compose up instead of their shell scripts), please see the section below: A custom sandbox.

Before trying to run the deployment, check the file scripts/environment.js to make sure the settings are correct for your sandbox:

    const localSandboxConfiguration = {
        token: "aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa",
        host: "http://localhost",
        port: "4001"
    };

    module.exports = localSandboxConfiguration;

These are the defaults, so they should work for you unless you have changed the configuration of your sandbox.
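If you do run your sandbox with different settings (say on another port, or on a CI server), one option is to let environment variables override the defaults instead of editing the file. Here is a minimal sketch of that idea. The variable names ALGOD_TOKEN, ALGOD_HOST and ALGOD_PORT and the function loadSandboxConfiguration are hypothetical names I've chosen for this example; they are not part of the example repository.

```javascript
// Sketch: start from the sandbox defaults and let environment variables
// override them. ALGOD_TOKEN, ALGOD_HOST and ALGOD_PORT are hypothetical
// names chosen for this example.
const defaults = {
    token: "a".repeat(64), // the default sandbox token is 64 "a" characters
    host: "http://localhost",
    port: "4001",
};

function loadSandboxConfiguration(env = process.env) {
    return {
        token: env.ALGOD_TOKEN || defaults.token,
        host: env.ALGOD_HOST || defaults.host,
        port: env.ALGOD_PORT || defaults.port,
    };
}

// With no overrides set, this prints the default host and port.
const config = loadSandboxConfiguration({});
console.log(`${config.host}:${config.port}`); // http://localhost:4001
```

Taking env as a parameter keeps the function easy to test, because a test can pass in a plain object rather than changing the real process environment.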

Choose a creator account

When we start our Algorand Sandbox it will most likely contain several accounts / wallets.

To deploy our smart contract we must choose an account in the Sandbox to use as the contract’s "creator" account. We must open a shell in our Algod container and find the mnemonic (kind of like a long password) for that account.

Getting a shell into the Algod Docker container is straightforward if you know the name of the container. By default you can do it like this:

    docker exec -it algorand-sandbox-algod bash

When you are in the Algod container, list all accounts like this:

    goal account list

Then pick an account and copy its address. You can export the account’s mnemonic like this:

    goal account export -a <address>

Now exit the Algod container shell, then back in your normal terminal, set the following environment variable (the quotes matter because the mnemonic contains spaces):

    export CREATOR_MNEMONIC="<mnemonic_for_the_creator_account>"

This sets the creator account for the deployment code.

Under Windows you must use set instead of export and leave the value unquoted, like this:

    set CREATOR_MNEMONIC=<mnemonic_for_the_creator_account>

The creator account should already have funds allocated to pay the fees for deployment of the smart contract. By default the accounts you have in the sandbox should have funds allocated. If you create a new account, though, you’ll have to transfer funds to it first before you can use it as the creator account.

Note that the accounts/mnemonics within your sandbox will change each time you rebuild your sandbox! Just running docker compose up again should keep the same accounts, which will save you having to look up the account/mnemonic again. But the next time you run docker compose up --build (because you want to reconfigure your sandbox or get the latest Algorand code) the accounts will be recreated and they will have different addresses and mnemonics.

This is kind of annoying because it makes it difficult to run a continuous integration pipeline with automated testing when you can’t rely on accounts with consistent mnemonics. See how we deal with this at Hone in the section below: A custom sandbox.
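Because the mnemonic has to be copied again whenever the sandbox is rebuilt, it's easy to end up with a truncated or unquoted value in CREATOR_MNEMONIC. As a small safeguard you could check its shape before using it. The sketch below relies on the fact that Algorand account mnemonics are 25 words long; the function name checkMnemonicShape and its error message are my own, and the check is deliberately shallow (algosdk still validates the actual words and checksum).

```javascript
// Sketch: catch an obviously malformed mnemonic early.
// Algorand account mnemonics are 25 words long. This only checks the shape;
// algosdk.mnemonicToSecretKey still validates the words and checksum.
function checkMnemonicShape(mnemonic) {
    const words = (mnemonic || "").trim().split(/\s+/).filter(Boolean);
    if (words.length !== 25) {
        throw new Error(
            `Expected a 25-word mnemonic in CREATOR_MNEMONIC, ` +
            `got ${words.length} word(s). Did you forget to quote the value?`
        );
    }
    // Normalise whitespace so copy/paste artifacts don't matter.
    return words.join(" ");
}
```

For example, calling checkMnemonicShape(process.env.CREATOR_MNEMONIC) at the top of a deployment script would fail fast with a readable message when only the first word of the mnemonic made it into the variable.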

Deploying the contract

Before getting to automated testing we should first be able to test our code manually. You can think of manual testing as a necessary stepping stone to automated testing: if you can’t do this manually you won’t have anything that you can automate!

Once you’ve created your sandbox, then checked the configuration in environment.js and set your CREATOR_MNEMONIC environment variable, you are now ready to do a trial run of deploying the teal-counter smart contract to your sandbox.

Invoke the deployment code like this:

    npm run deploy

For a successful deployment you should see some output like this:

    > <package-name>@<version> deploy
    > node scripts/deploy.js

    Deployed:
    - Contract id: 1, address: WCS6TVPJRBSARHLN2326LRU5BYVJZUKI2VJ53CAWKYYHDE455ZGKANWMGM

The output shows you the id of the application (id number 1 in this case because it’s the first contract deployed to my new sandbox instance) and the address of the smart contract.

Invoking npm run deploy runs the code in scripts/deploy.js. Here’s an extract of that code:

    // algosdk and environment (scripts/environment.js) are loaded earlier in the file.

    // Read the creator's mnemonic from the environment.
    const creatorMnemonic = process.env.CREATOR_MNEMONIC;
    if (!creatorMnemonic) {
        throw new Error(`Please set CREATOR_MNEMONIC to the mnemonic for the Algorand account that creates the smart contract.`);
    }

    // Recover the account (address and secret key) from the mnemonic.
    const creatorAccount = algosdk.mnemonicToSecretKey(creatorMnemonic);

    // Connect to the sandbox using the settings from environment.js.
    const algodClient = new algosdk.Algodv2(environment.token, environment.host, environment.port);

    // Deploy the smart contract.
    // (This extract runs inside an async function, hence the await.)
    const { appId, appAddr } = await deployTealCounter(algodClient, creatorAccount, 0);

    console.log(`Deployed:`);
    console.log(`- Contract id: ${appId}, address: ${appAddr}`);

This code uses algosdk (the Algorand JavaScript SDK) and some helper functions like deployTealCounter to deploy the teal-counter smart contract to the sandbox.

See the full code for deploy.js here: https://github.com/hone-labs/teal-testing-example/blob/main/scripts/deploy.js

My single biggest tip for deployment: have one function that wraps up the deployment configuration for your smart contract and then use that function every time you do deployment. In this case that function is called deployTealCounter. You can think of this as part of the “software development kit” (SDK) for this application.

If you have used the same deployment function for local testing in your sandbox, you can be confident that the same code is tested and will work when you go live on Testnet or Mainnet.

Having a “single source of truth” for your deployment code means that every test run of your smart contract is like a rehearsal for your ultimate real deployment on Mainnet.
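Here is a stripped-down sketch of the “one deployment function” idea, to show its shape rather than the repository's real implementation. Everything named here (COUNTER_DEPLOYMENT, deployCounter, createApp) is hypothetical, and the schema numbers and the initialValue parameter are assumptions on my part. See the repository for the actual deployTealCounter helper.

```javascript
// Sketch of the "single source of truth" pattern. All names here are
// hypothetical and are not the repository's actual helpers.
const COUNTER_DEPLOYMENT = Object.freeze({
    approvalProgram: "contracts/counter_approval.teal",
    globalInts: 1,   // assumption: one uint slot for counterValue
    globalBytes: 0,
    localInts: 0,
    localBytes: 0,
});

// `createApp` is whatever low-level function actually talks to the node.
// Deployment scripts and tests both call deployCounter, so the
// configuration above is defined exactly once.
async function deployCounter(createApp, creatorAccount, initialValue) {
    return createApp({ ...COUNTER_DEPLOYMENT, creatorAccount, initialValue });
}
```

The point of the pattern is that a test can call deployCounter with a client for the sandbox while the production script calls the very same function with a client for Testnet or Mainnet, so nothing about the contract's configuration can drift between the two.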

See the code for other helper functions here:

https://github.com/hone-labs/teal-testing-example/blob/main/scripts/lib/algo-utils.js

Invoking methods on the contract

Now that we have deployed our smart contract we are ready to invoke its “methods”.

Invoke this command to increment the counter:

    npm run invoke-increment -- <app-id>

Just replace <app-id> with the id of the app from the last section.

And this command to decrement it:

    npm run invoke-decrement -- <app-id>

Use this command to show the value of the counter variable after each change:

    npm run show-globals

The output will look something like this:

    .-----------------------------.
    |           Globals           |
    |-----------------------------|
    | Name         | Type | Value |
    |--------------|------|-------|
    | counterValue | 2    | 1     |
    '-----------------------------'
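If you're wondering where the “Type 2” comes from: in the raw global state returned by algod, keys are base64 encoded and each value carries a type, where 1 means bytes and 2 means uint. Here is a small sketch of decoding that structure into a plain object. I'm assuming the JSON shape that algosdk 1.x/2.x passes through from the algod REST API (an array of key/value entries); the function name decodeGlobalState is my own, and the sample input is built by hand rather than fetched from a node.

```javascript
// Sketch: turn raw global state (as returned by the algod REST API) into a
// plain object. Keys are base64 encoded; type 1 is bytes and type 2 is uint.
function decodeGlobalState(globalState) {
    const decoded = {};
    for (const { key, value } of globalState) {
        const name = Buffer.from(key, "base64").toString("utf8");
        decoded[name] = value.type === 2
            ? value.uint
            : Buffer.from(value.bytes, "base64");
    }
    return decoded;
}

// Hand-built sample shaped like the "global-state" array in an algod
// application response.
const sample = [
    {
        key: Buffer.from("counterValue").toString("base64"),
        value: { type: 2, uint: 1, bytes: "" },
    },
];
console.log(decodeGlobalState(sample)); // { counterValue: 1 }
```

A decoded object like this is much easier to compare against expected values in an automated test than the raw encoded array.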

A custom sandbox

At Hone, we created our own custom sandbox based on the official Algorand Sandbox. This is completely optional, but having your own custom sandbox does make a few things easier.

To start with, the official sandbox doesn’t support Windows very well. It requires the use of shell scripts that don’t work in the Windows terminal. And for anyone else (on Linux and macOS) who would simply prefer to be able to start their sandbox with a simple invocation of docker compose up, having your own sandbox is the way to go, especially if there are other customizations you’d like to apply.

Reverse engineering our own sandbox was a necessary educational experience for us. We wanted to deploy the Algorand Sandbox to our cloud-based Kubernetes cluster (I might cover this in a future blog post). To do that we needed to understand how to create our own Dockerfiles for Algod and the Indexer. So I pulled apart the official shell scripts to learn how they work.

After creating our own custom sandbox we were bothered by constantly having to update our deployment and testing configuration with the new mnemonics that were generated each time we rebuilt our sandbox. To fix this we embedded a custom genesis file into our sandbox. This allows us to have a fixed and unchanging faucet account with a known mnemonic and a huge amount of funds. This can then be used to fund whatever other test accounts we create (I’ll show you how to do that in part two of this series). For this reason alone, having a reliable and consistent faucet account in your sandbox, I believe it’s worthwhile to maintain your own custom sandbox.

You can find the code for the Hone custom sandbox here:

https://github.com/hone-labs/algorand-sandbox

“Dev mode” vs “normal mode”

One more thing our custom sandbox does is it defaults to “dev mode”. Don’t do what we did and accidentally work with a “normal mode” sandbox for months before discovering how much faster “dev mode” is (thanks to Jason Weathersby for pointing out dev mode to us).

When using the official sandbox you should start it like this:

    ./sandbox up dev

If you have your own custom sandbox, it’s a configuration change in the network template and genesis file. There isn’t a huge amount of difference between these files in normal and dev mode; mostly it seems to be that the DevMode field is set to true.

Dev mode is so much faster for testing because it creates a block immediately for each transaction, instead of waiting to batch multiple transactions into the next block.

If you thought dev mode was all roses, you are wrong. Unfortunately it also changes the way the Algorand node’s timestamp is computed. The knock-on effect is that the timestamp for a long-lived sandbox goes bad very quickly, so you can’t test TEAL code that depends on the timestamp in a dev mode sandbox. It’s a massive problem and we are waiting for the issue to be corrected.

Conclusion

This has been part one of a series of blog posts on automated testing for Algorand smart contracts.

I’ve made the case for testing, we looked at setting up our Algorand Sandbox to deploy our smart contract and we discussed using a custom sandbox to be more effective. In parts two and three we’ll learn how to run automated tests against our smart contract.

If you liked this blog post please let me know and I can write more in the future about continuous integration, continuous delivery and production deployments for smart contracts.

About the author

Ashley Davis is a software craftsman and author. He is VP of Engineering at Hone and currently writing Rapid Fullstack Development and the 2nd Edition of Bootstrapping Microservices.

Follow Ashley on Twitter for updates.

About Hone

Hone solves the capital inefficiencies present in the current Algorand Governance model where participating ALGO must remain in a user’s account to be eligible for rewards, eliminating the possibility for it to be used as collateral in Algorand DeFi platforms or as a means to transfer value on the Algorand network. With Hone, users can capture governance-reward yield while using their principal, and the yield generated, by depositing ALGO into Hone and receiving a tokenized derivative called dALGO. They can then take dALGO and use it as collateral in DeFi or simply transfer value on-chain without sacrificing governance rewards.